Knowing the origins of logical and physical data modeling concepts and techniques can help data architects and data analysts understand the field of data management and the relationship between data modeling and other data disciplines

In the early days of data processing, the entire focus of system development revolved around automating tedious manual business processes, such as order processing or accounts receivable. Thus, the main effort during the system development life cycle (SDLC) was to produce a set of programs that automated a business process. Then, the elimination of slow manual processes was the key driver for writing systems, and the data used by the systems simply was viewed as a byproduct.

As the technology and the complexity of systems grew, methodologies and modeling techniques were invented to improve the quality of the deliverables and to ensure that system developers could follow repeatable SDLC processes. Quite predictably, the first modeling techniques revolved around processes. Data flow diagrams popularized by Gane-Sarson, Ed Yourdon, and Tom Demarco modeled the business processes; system flow diagrams and structure charts, popularized by Ed Yourdon, Larry Constantine, and Meilir Page-Jones modeled the automated processes (program modules and system flow).

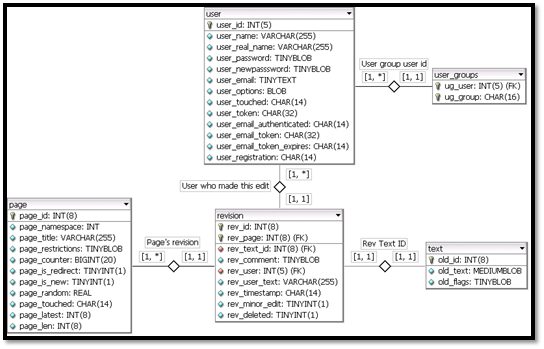

Just as process-oriented modeling techniques were becoming the standard of SDLC methodologies in the 1970s, Dr. Peter Chen broke the pattern of process-oriented system development with his invention of the entity-relationship (E/R) model, which soon became known as the logical data model. Dr. Chen’s first version of the E/R model represented entities as rectangles and relationships between entities as rhombuses (diamonds) on the relationship lines. It also graphically showed attributes (data elements) as circles attached to either entities or relationships. This notation made it possible to maintain many-to-many relationships without resolving them into associative entities the way we do today in order to accommodate the implementation of the data model in a relational database.

Significance of Data Modeling

E/R modeling was revolutionary in that, for the first time in data processing, data – not processes – were at the center of both business analysis and system design. The implications were enormous. Data could now become a reusable commodity, which meant that every unique data element was identified and inventoried once and only once. That provided the ability to track every person or program using the unique data elements for any purpose. The concepts of data-driven analysis, and later of data-driven methodologies, were born as business analysts and data modeling practitioners realized that they could finally create a business model of their organization that would logically portray a non-redundant “single version of the truth” of their enterprise in terms of its data resources. Companies created departments called data resource management (DRM) and information resource management (IRM) to manage their business data as a corporate asset, just as they managed their financial assets, fixed assets, real estate, or human resources.

At the same time, Dr. Edgar F. Codd realized that data potentially could be physically organized and stored independent of any particular automated process, so that multiple processes could share the same data. In other words, he too wanted data to be reused for multiple purposes. In his seminal article “A Relational Model of Data for Large Shared Data Banks” published by the Communications of the ACM (Volume 13 / Number 6), Dr. Codd presented the relational model for a new kind of DBMS, which was based on the mathematical set theory.

Dr. Codd’s relational model was an unordered group, or set, of items, where each item was divided into fields with atomic values. The theory was that all items would have the same structure and the same number of fields, and that each field would be restricted to contain one and only one atomic data type. In other words, fields could no longer be redefined. Furthermore, the relational model defined a set of mathematical operations and constraints that could be applied to the set of items. These relational operations could theoretically be divided into components that could be processed by independent tasks running on one or more CPUs, and even on distributed machines. No other database model at that time could offer such powerful parallel processing potential, neither IBM’s hierarchical database model (IMS), nor their network database model (IDMS).

In 1983, Christopher J. Date, who had corresponded with Dr. Codd since 1970 and later worked with him at the IBM research laboratory, joined Dr. Codd in forming the consultancy, Codd & Date International, where they advised major DBMS vendors on the direction of their relational products, and promoted the relational model through conferences and seminars world-wide. In 1985, Dr. Codd published the famous 12 rules of relational databases (actually 13 rules when counting Rule Zero).

Logical Data Modeling Concepts

The power of a relational data model is inextricably tied to the concepts of Peter Chen’s original E/R model, that is, to the logical data model. At its core is the notion of separating data from processes – from both business and system processes, for update (operational) as well as access (decision support) purposes. The logical data model achieves this separation from a business perspective, and the physical data model implements this separation from the database perspective.

Process-Independence

Separating data from processes during logical data modeling means disregarding access paths, programming languages, SQL versions, query and reporting tools, online analytical processing (OLAP) tools, and RDBMS products. All these process-dependent variables are completely immaterial during business analysis (logical data modeling), which focuses only on what data comprises the organization. These variables come into play later during database design (physical data modeling), which addresses how data will be used and, therefore, how data should be stored.

Business-Focused Data Analysis

Logical data modeling facilitates business-focused data analysis, which is quite different from the usual kind of analysis we perform during SDLC, which is system analysis. An old but very appropriate term for activities performed during system analysis is “external design” because the thought processes applied during system analysis are geared toward producing design alternatives for the database to be built. In fact, every project in which requirement definitions for a specific application are followed by design activities omits business-focused data analysis and performs only system analysis – at best.

While system analysis produces preliminary design alternatives, business-focused data analysis activities are geared toward integrating the data into a “single version of the truth.” This is accomplished by studying the business rules that govern the data, understanding the usage of the data, and uncovering existing defects in the data (e.g., business rule violations, referential integrity violations, synonyms, homonyms), irrespective of any database design or implementation method. Business-focused data analysis uses rules of normalization to build a non-redundant and fully integrated data model, which reflects the 360o view of a business.

Data Integration (single version of the truth)

Many people confuse data integration with data consolidation. Consolidating data simply means gathering data elements that identify or describe the same business entity, like customer or product, from multiple source files or source databases and storing them in one table or in a set of dependent tables. Integrating data goes far beyond that. In addition to consolidating data, integration enforces data uniqueness – the building blocks of the “single version of the truth” that enable you to reuse the same data without the need to duplicate it and without the additional development and maintenance costs of managing the duplicates. Data integration requires several actions during logical data modeling:

- Examine the definition, the semantic intent, and the domain values of each logical entity to find potential duplicates of business entities that would otherwise not be discovered because the entities are known under different names in the systems.

- Ensure that each entity instance has one and only one unique identifier (primary key), which, in turn, is never reassigned to a new entity instance even after the old instance expired and was deleted from the database.

- Use the six normalization rules to put “one fact in one place,” that is one attribute in one, and only one, owning entity. This means that an attribute can be assigned to only one entity either as an identifier of that entity or as a descriptive attribute of that and no other entity. This modeling activity ensures that each attribute is captured once and only once, and that it remains unique within the data universe of the organization. Hence, the “single version of the truth.”

- The last and most important activity of integration is to capture the business actions (or business transactions) that connect the business entities in the real world. These business actions are shown as data relationships among the entities. It is paramount to capture them from a logical business perspective (not from a reporting pattern or data access perspective) because these relationships are the basis for all potential access patterns, known and unknown, now and in the future. Business people will never ask for information about two completely unrelated business entities because any such information would be irrelevant, if not nonsensical.

Data Quality

Since the activities of logical data modeling are solely business-focused data analysis activities, they include the validation of the logical data model metadata components (i.e., entities, attributes, relationships, definitions, domains, business rules, etc.) against the existing operational data in the source files and source databases. The validation activities include asking probing questions, applying normalization rules to put “one fact in one place,” and scrutinizing definitions, domains, and semantic meanings of all entities and all attributes to ensure their uniqueness.

Performing this type of data archeology during business-focused data analysis will inadvertently expose data quality problems that would otherwise never have been detected during database design activities because database designers have neither the time nor the responsibility to perform such validation activities while they are trying to design an efficiently performing database. Therefore, logical data modeling directly contributes to improving data quality.

Conclusion

As data management professionals, it is helpful to remember the principles of logical data modeling to bring order to the existing data chaos. Data re-usability requires a “single version of the truth” about the business, which can be achieved through a combination of top-down and bottom-up data modeling activities, using both Dr. Chen’s original logical data modeling concepts as well as Dr. Codd’s six normalization rules to place each unique atomic data element into its owning entity once, and only once.